Batch Normalization Increases Adversarial Vulnerability: Disentangling Usefulness and Robustness of Model Features

Abstract

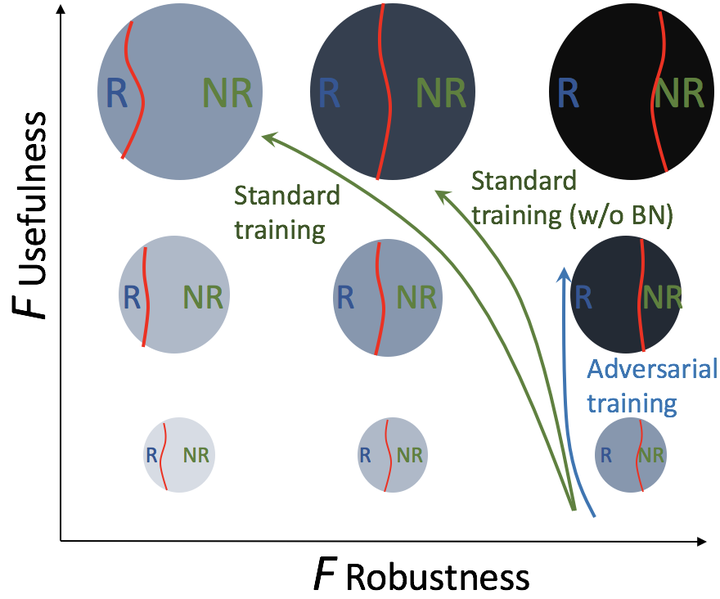

Batch normalization (BN) has been widely used in modern deep neural networks (DNNs) because it speeds up convergence. BN is observed to increase model accuracy, but at the cost of adversarial robustness. We conjecture that this increased adversarial vulnerability arises because BN shifts the model to rely more on non-robust features (NRFs). Our exploration finds that other normalization techniques also increase adversarial vulnerability, and our conjecture is further supported by analyzing model corruption robustness and feature transferability. Treating a classifier DNN as a feature set F, we propose a framework for disentangling the robust usefulness of F into F usefulness and F robustness. We adopt a local-linearity-based metric, termed LIGS, to define and quantify F robustness. Measuring F robustness with LIGS provides direct insight into the shift in feature robustness, independent of usefulness. Moreover, the trend of LIGS over the whole training process sheds light on the order in which features are learned, i.e., from robust features (RFs) to NRFs or vice versa. Our work analyzes how BN and other factors influence the DNN from the feature perspective. Prior works mainly use accuracy to evaluate such influence, which reflects only F usefulness; we argue that evaluating F robustness is equally important, and our work fills this gap.
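The abstract does not spell out how LIGS is computed. As a rough illustration of what a local-linearity-based robustness score can look like, the sketch below measures how similar a scalar function's input gradients are at a point x and at randomly perturbed neighbours x + δ. For a locally linear function the gradient barely changes, so the mean cosine similarity stays close to 1. This is a hypothetical stand-in, not the authors' LIGS definition. The function names, the Gaussian perturbation scale `sigma`, the sample count, and the use of finite-difference gradients (instead of automatic differentiation, to keep the sketch dependent only on NumPy) are all assumptions.

```python
import numpy as np


def input_gradient(f, x, eps=1e-5):
    """Central finite-difference estimate of the gradient of scalar f at x."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step.flat[i] = eps
        grad.flat[i] = (f(x + step) - f(x - step)) / (2 * eps)
    return grad


def local_gradient_similarity(f, x, sigma=0.1, n_samples=8, seed=0):
    """Hypothetical local-linearity score (illustrative stand-in for LIGS).

    Returns the mean cosine similarity between the input gradient at x and
    the input gradients at Gaussian-perturbed neighbours x + delta.
    Values near 1 mean f behaves almost linearly around x.
    """
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    g0 = input_gradient(f, x).ravel()
    sims = []
    for _ in range(n_samples):
        delta = rng.normal(scale=sigma, size=x.shape)  # random local perturbation
        g1 = input_gradient(f, x + delta).ravel()
        # Small constant guards against division by zero for flat gradients.
        cos = np.dot(g0, g1) / (np.linalg.norm(g0) * np.linalg.norm(g1) + 1e-12)
        sims.append(cos)
    return float(np.mean(sims))
```

For example, a linear score such as `w @ x` has a constant gradient and yields a value of essentially 1, while a rapidly oscillating function such as `np.sum(np.sin(50 * x))` yields a much lower value. In a real DNN the scalar would be a loss or logit, and the gradients would come from automatic differentiation rather than finite differences.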